Improving Object Detection via Local-global Contrastive Learning

Abstract

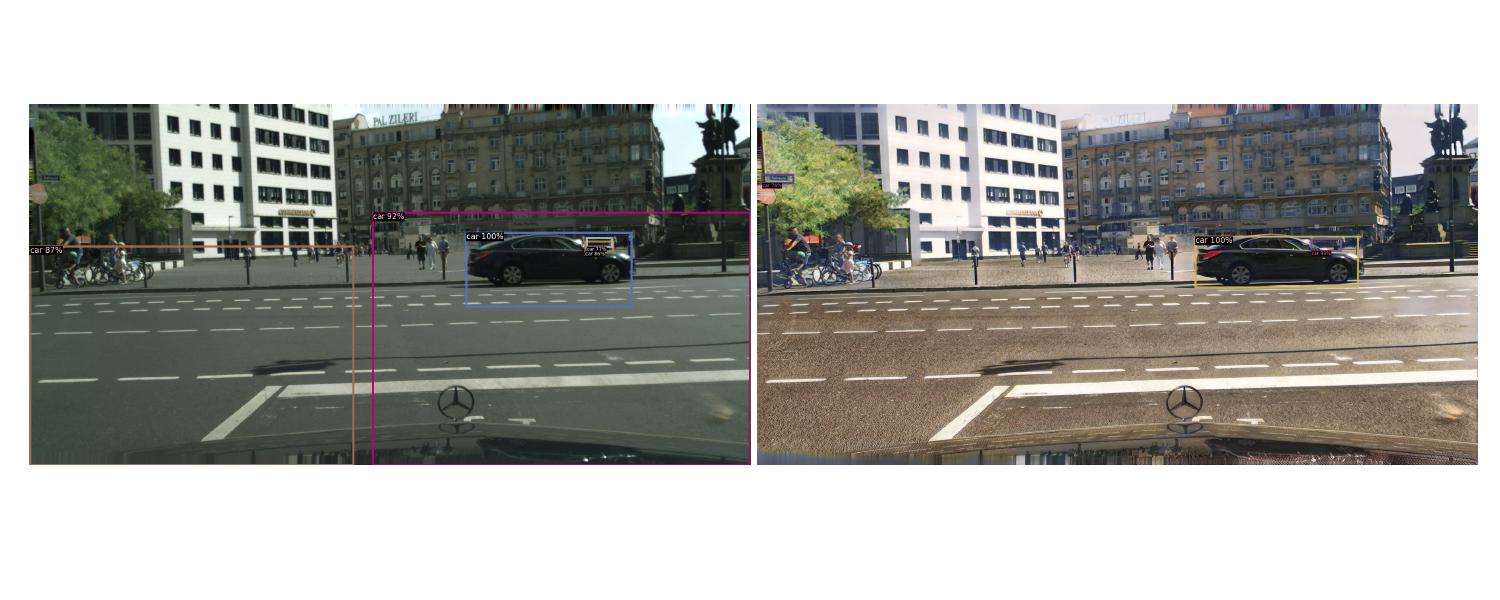

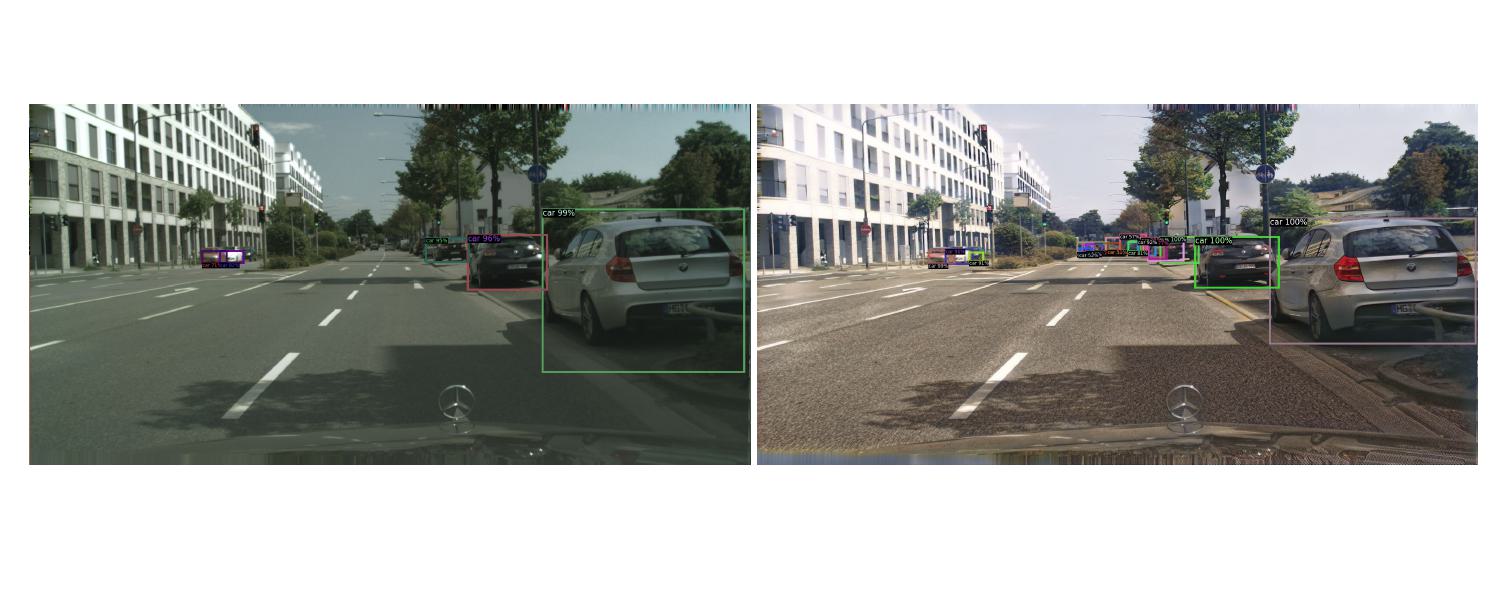

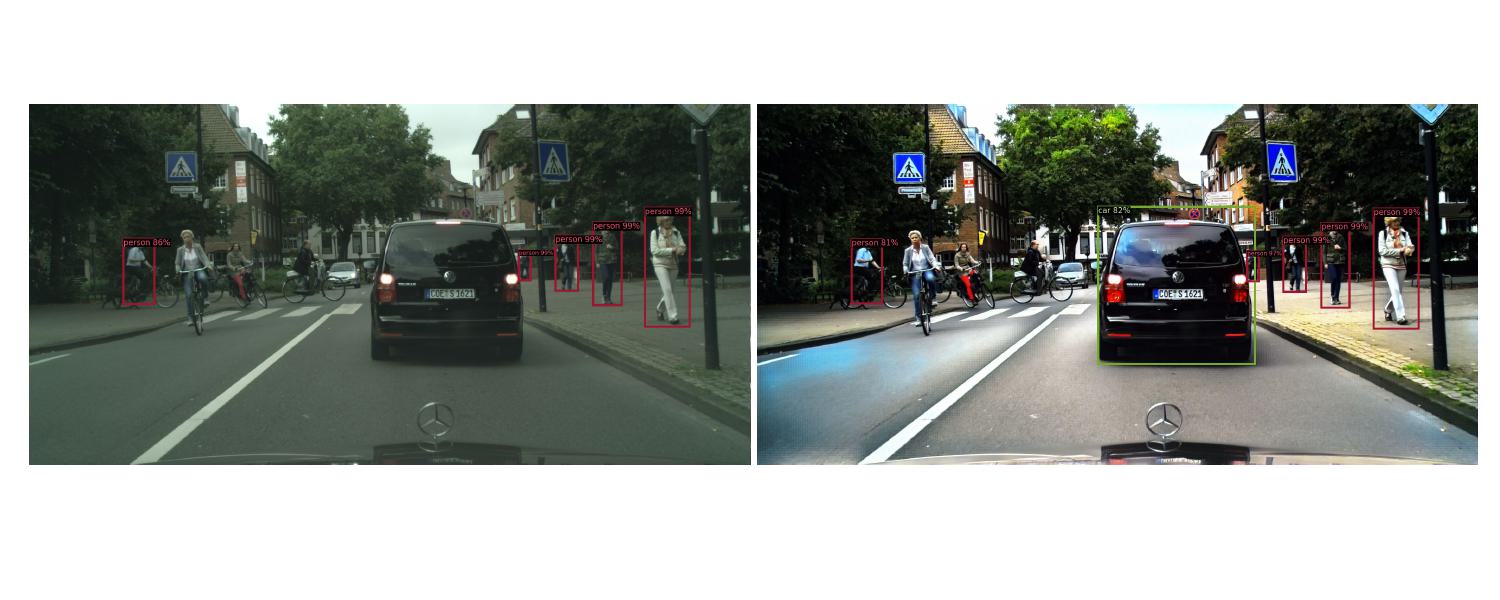

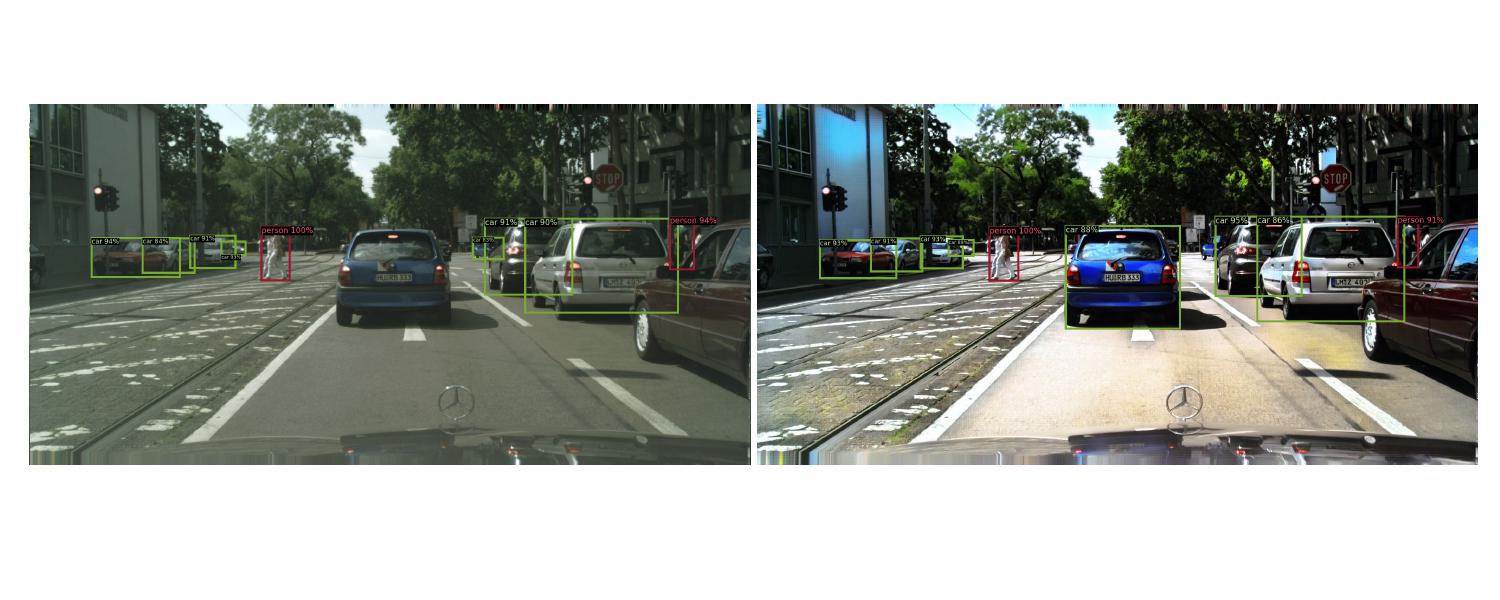

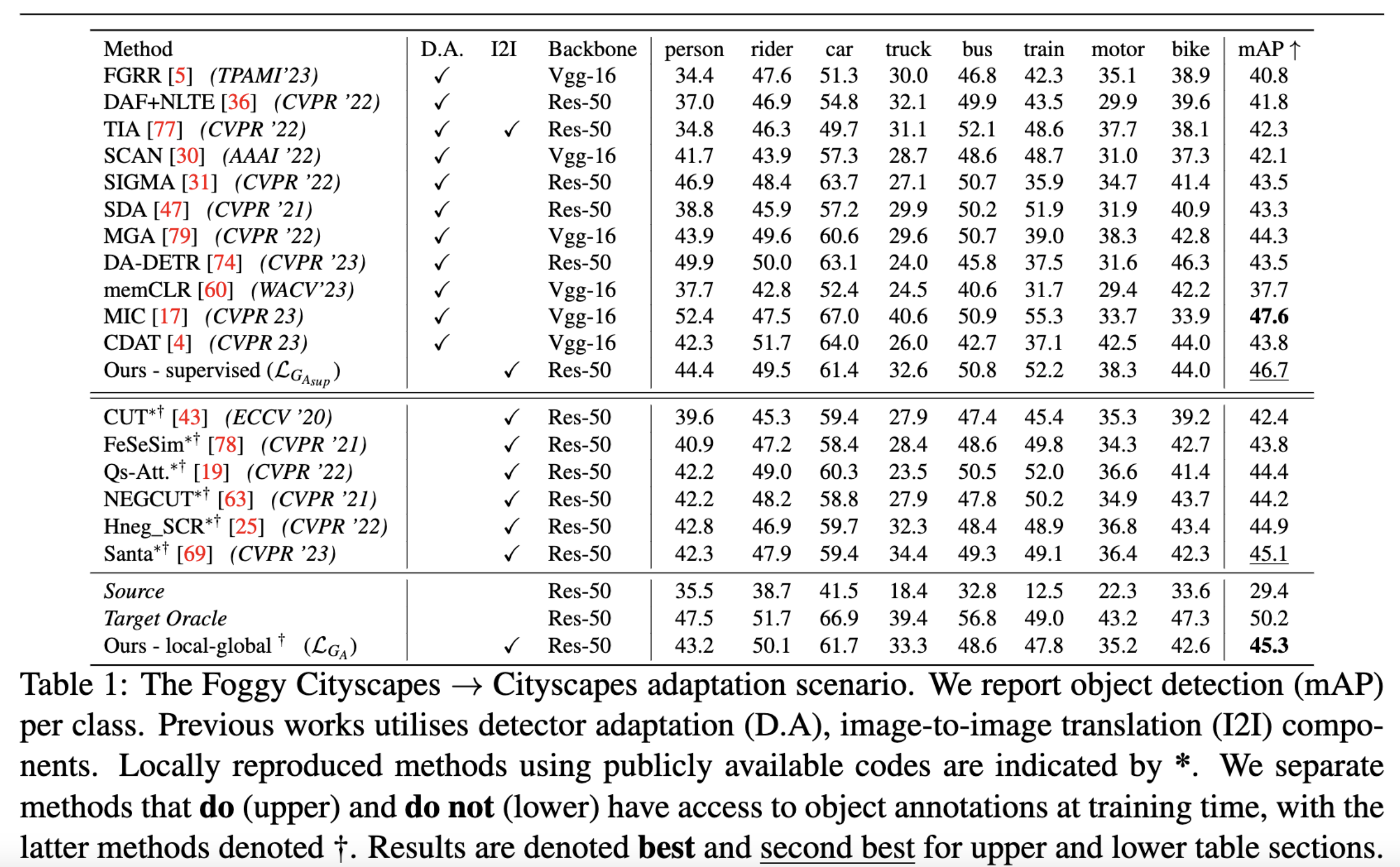

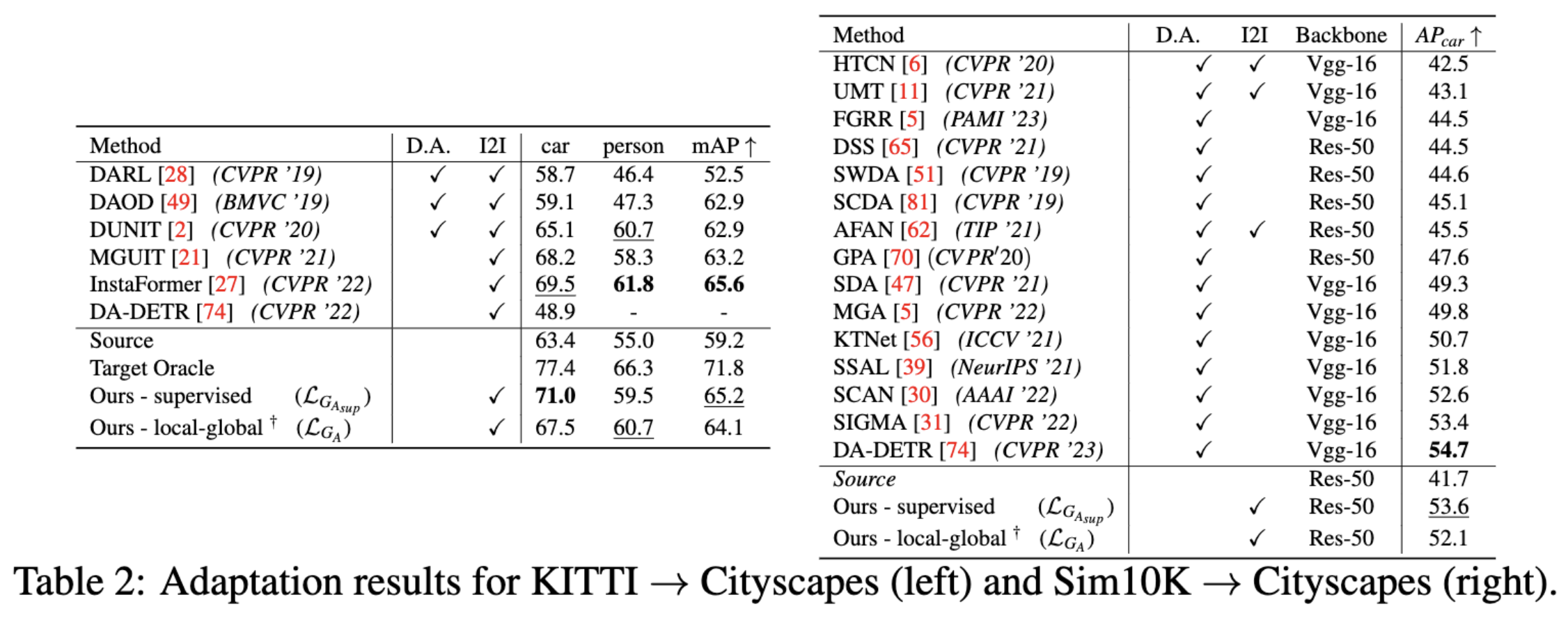

Visual domain gaps often impact object detection performance. Image-to-image translation can mitigate this effect, where contrastive approaches enable learning of the image-to-image mapping under unsupervised regimes. However, existing methods often fail to handle content-rich scenes with multiple object instances, which manifests in unsatisfactory detection performance. Sensitivity to such instance-level content is typically only gained through object annotations, which can be expensive to obtain. Towards addressing this issue, we present a novel image-to-image translation method that specifically itargets cross-domain object detection. We formulate our approach as a contrastive learning framework with an inductive prior that optimises the appearance of object instances through spatial attention masks, implicitly delineating the scene into foreground regions associated with the target object instances and background non-object regions. Instead of relying on object annotations to explicitly account for object instances during translation, our approach learns to represent objects by contrasting local-global information. This affords investigation of an under-explored challenge: obtaining performant detection, under domain shifts, without relying on object annotations nor detector model fine-tuning. We experiment with multiple cross-domain object detection settings across three challenging benchmarks and report state-of-the-art performance.

Method

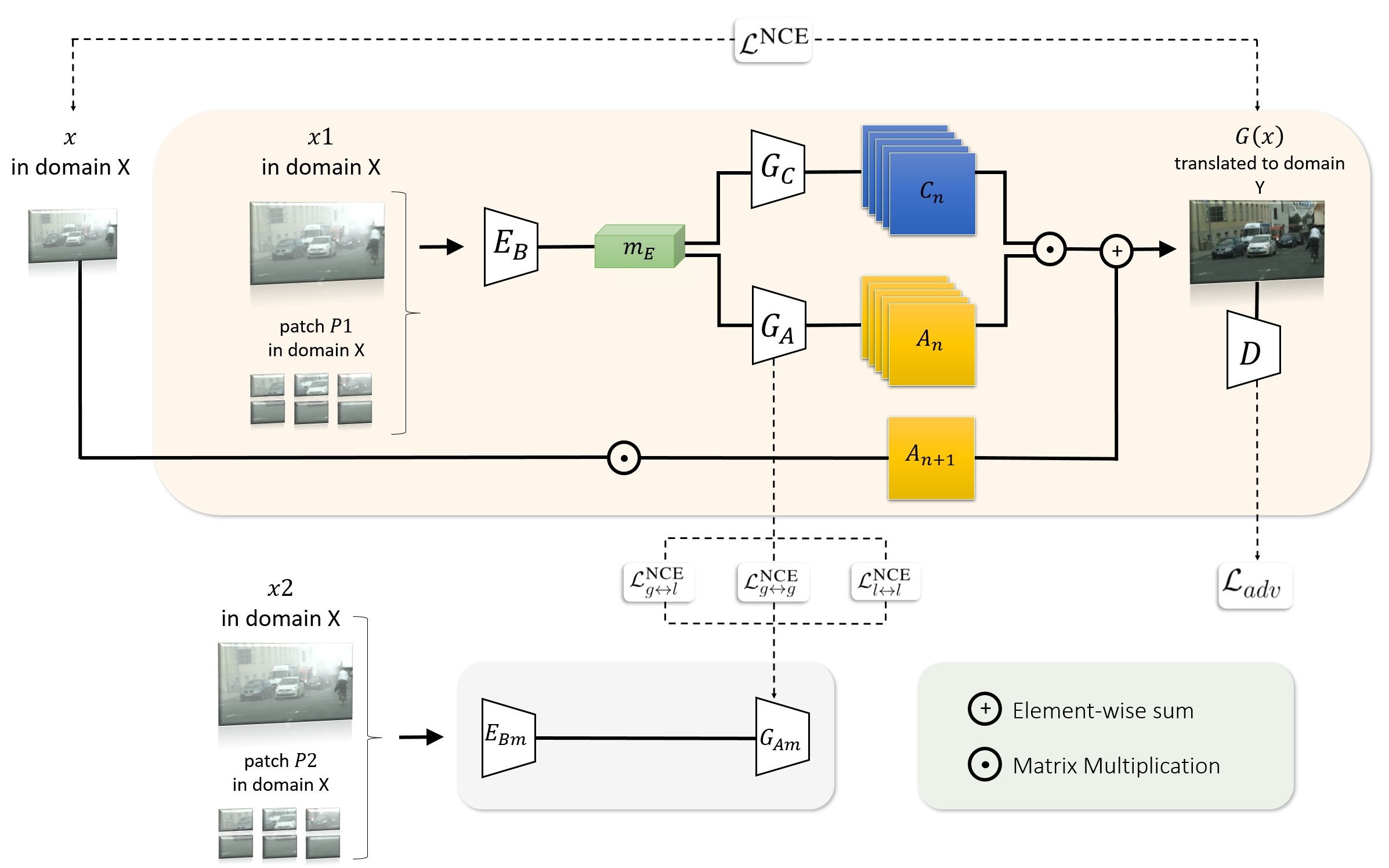

Model architecture

We introduce an inductive prior that optimises object instance appearance through spatial attention masks, effectively disentangling scenes into background and foreground regions. We adopt an encoder-decoder model where the encoder \(E_B\) acts as a feature extractor, generating an image representation of lower dimensionality. We decompose our decoder into two components: a content generator \(G_C\), producing a set of \(n\) content maps \( \{C_t \mid t \in [0, n-1]\} \), and an attention generator \(G_A\) producing a set of \(n+1\) attention maps \( \{A_t \mid t \in [0, n]\} \). The translated image \(G(\mathbf{x})\) is recovered as:

\[ \begin{equation} G(\mathbf{x})=\sum_{t=1}^{n} \underbrace{(C^{t} \odot A^{t})}_\textrm{foreground } + \underbrace{(x \odot A^{n+1})}_\textrm{background}. \label{eq:fb} \end{equation} \]

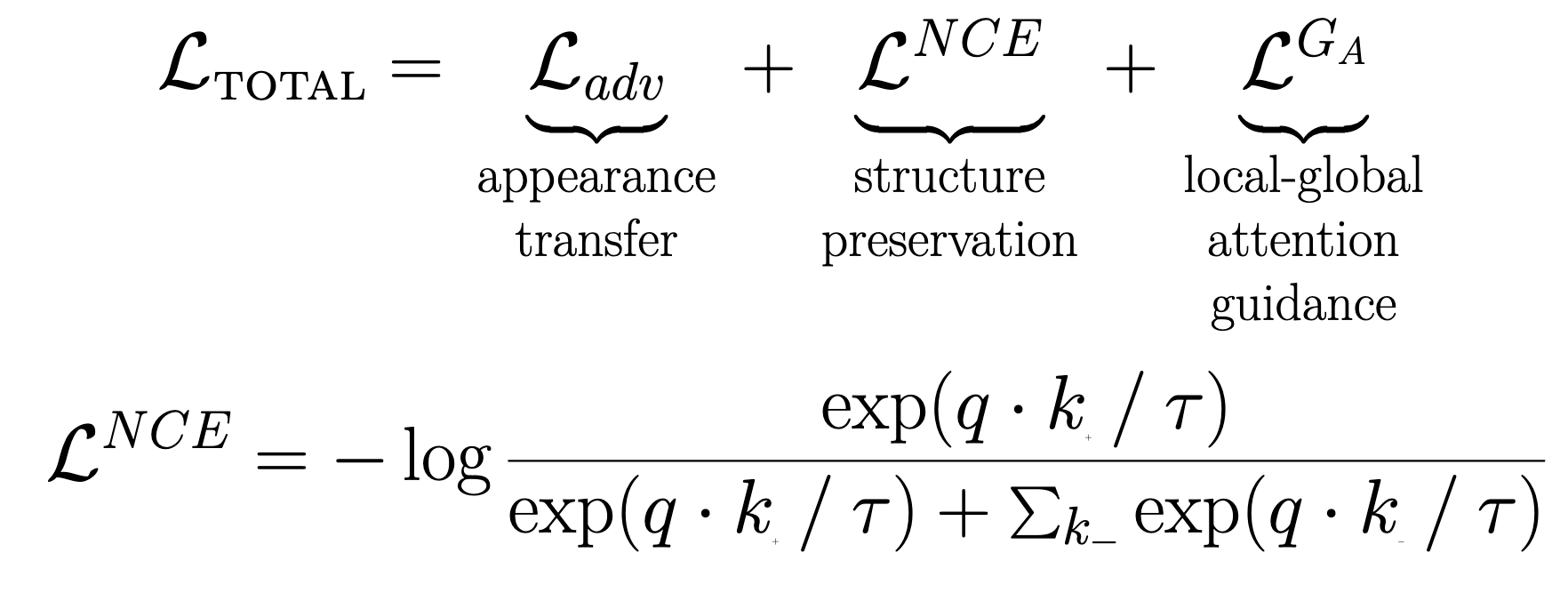

Optimization

- \( \La_{adv} \) adversarial term

- \( \La^{NCE} \) infoNCE loss on input and translated patches

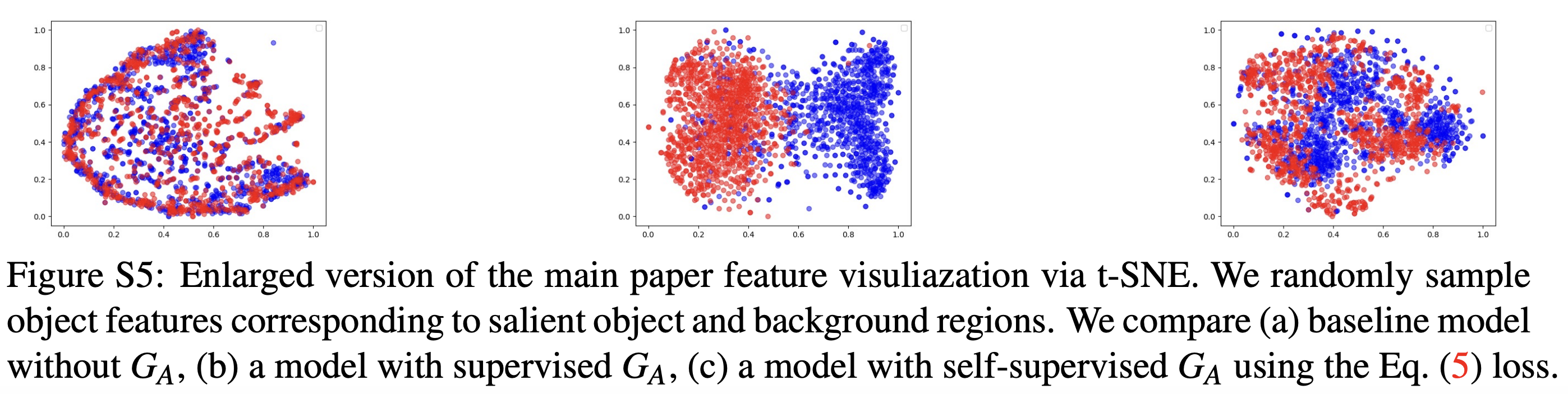

Local-global contrastive learning

Our approach learns to represent objects by contrasting local-global information. We introduce multi-level supervision, directly on \(G_A\) features:

- \( g \rightarrow g \) loss term between \( \mathit{global} \) representations of \(\mathbf{x}\)

- \( l \rightarrow l \) , \( l \rightarrow g \) terms considering \( \mathit{local}-\mathit{local} \) and \( \mathit{local}-\mathit{global} \) representations of \(\mathbf{x}\)

- for network layers \( L \); layer contribution weights \( w_{i} \)

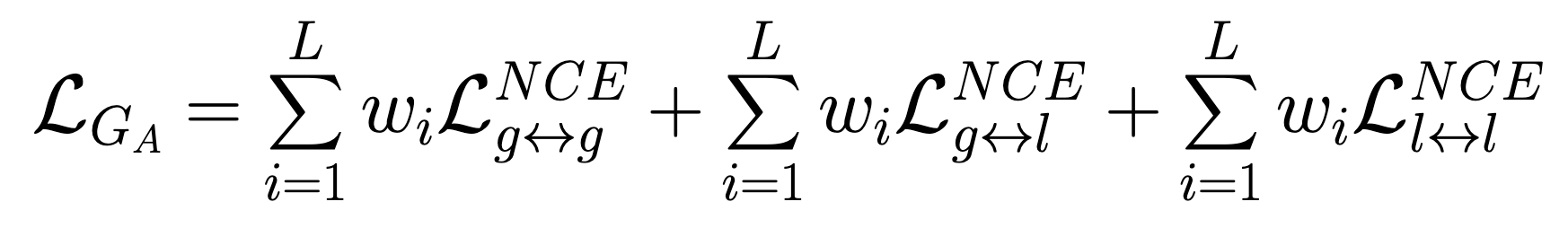

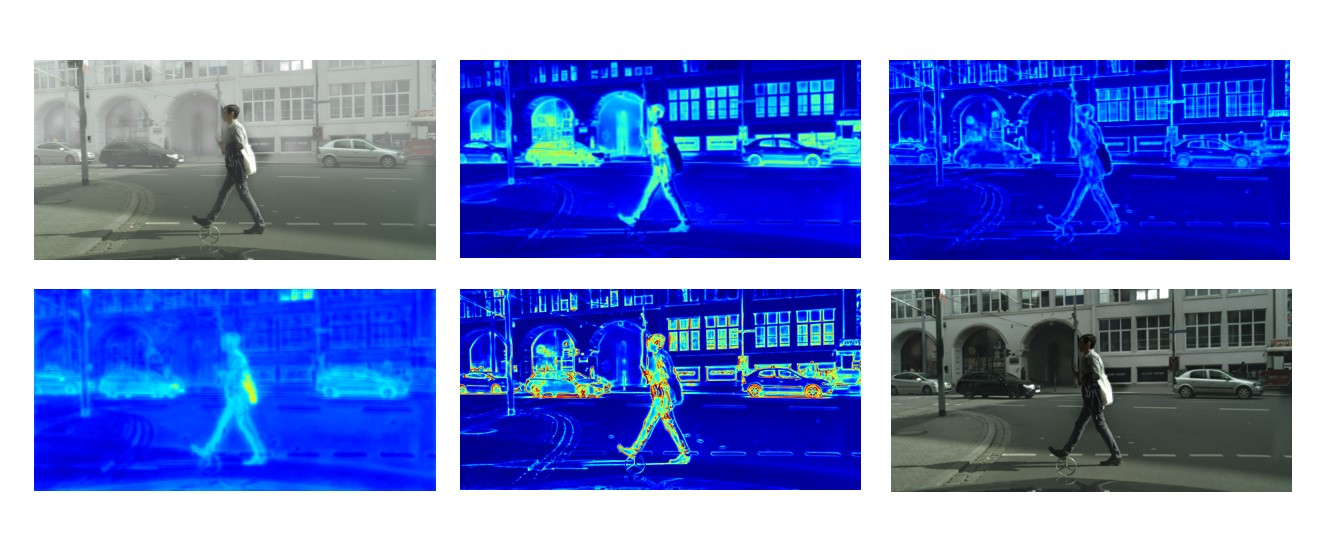

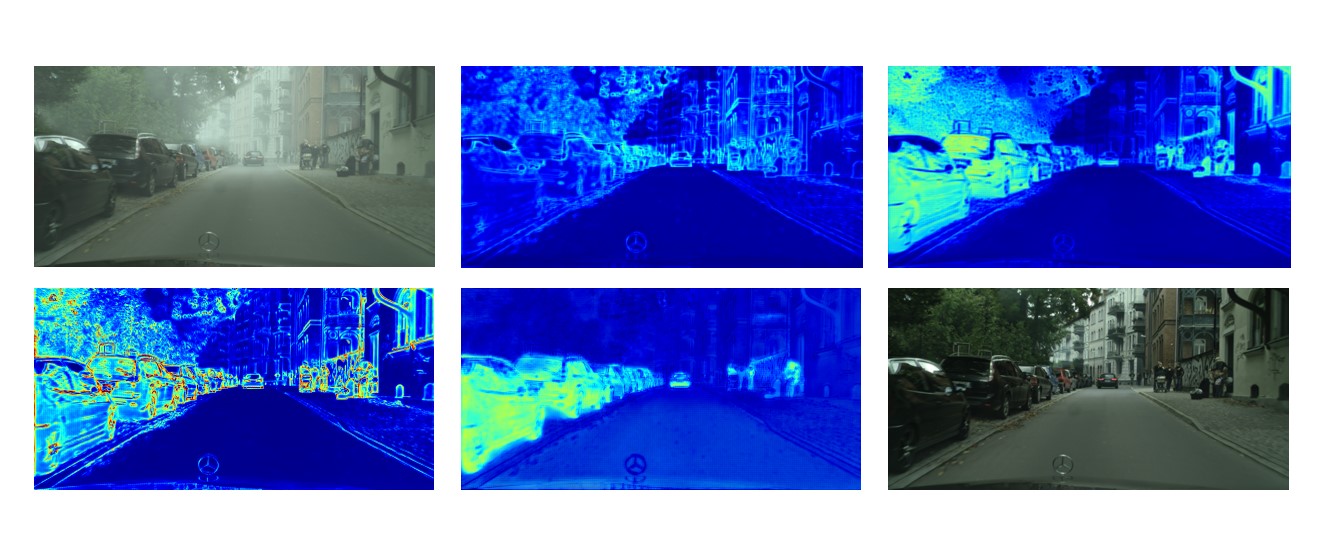

Attention Maps

Experimental results

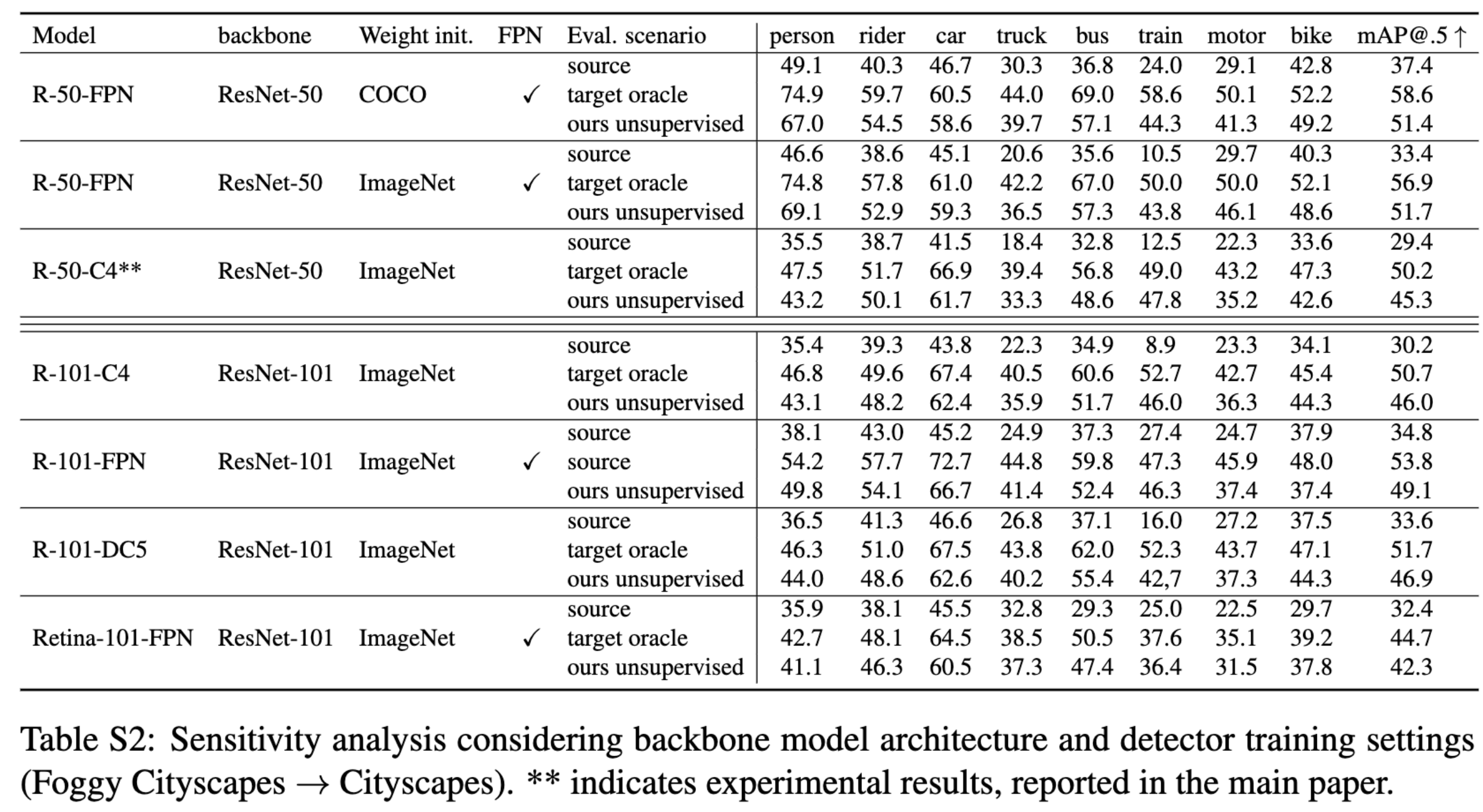

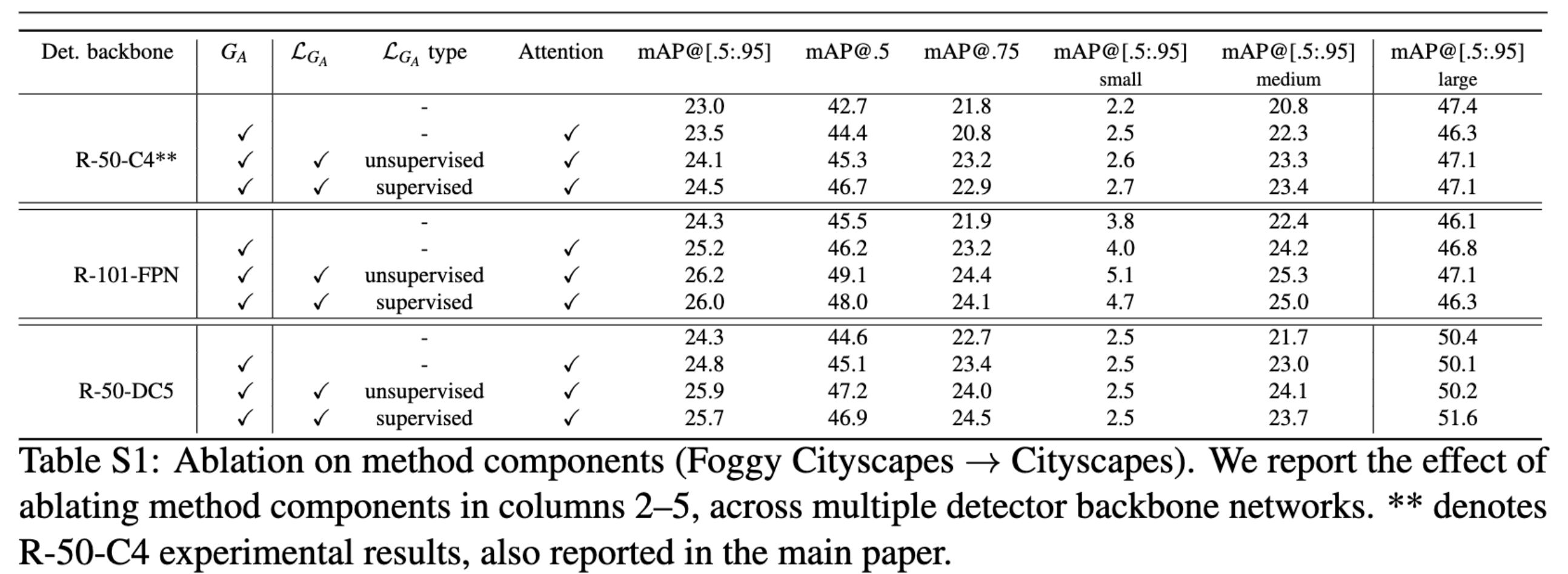

Ablative studies

BibTeX

@article{Triantafyllidou_2024_BMVC,

author = {Danai Triantafyllidou, Sarah Parisot, Ales Leonardis, Steven McDonagh},

title = {Improving Object Detection via Local-global Contrastive Learning},

journal = {BMVC},

year = {2024},

}